4. The Complex Method¶

The Complex method was first presented by Box [1], and later improved by Guin [2]. The method is a constraint simplex method, hence the name Complex, developed from the Simplex method by Spendley et al [3] and Nelder Mead, [4]. Similar related methods go under names such as Nelder-Mead Simplex. The main difference between the Simplex method and the complex method is that the Complex method uses more points during the search process.

The first part of this chapter describes the original Complex Method followed by a description of the Complex-rf, which is development of the Complex Method.

4.1. The Complex Method¶

In the Complex method, the word complex refers to a geometric shape with  , points in an n-dimensional space.

These k points are known as vertices of the complex.

To make the explanation of the algorithm simple we wiill focus on a two-dimensional space and a complex consisting of four vertices, i.e. n = 2 and k = 4.

, points in an n-dimensional space.

These k points are known as vertices of the complex.

To make the explanation of the algorithm simple we wiill focus on a two-dimensional space and a complex consisting of four vertices, i.e. n = 2 and k = 4.

Typically the number of points in the complex (k), is twice as many as the number of design variables (n).

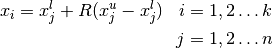

The starting points are generated using random numbers. Each of the k points in the complex could be expressed according to [5] where  and

and  are the upper and lower variable limits and

are the upper and lower variable limits and  a random number in the interval [0, 1].

a random number in the interval [0, 1].

(1)¶

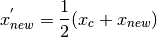

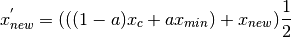

The objective is to minimize an objective function  . The main idea of the algorithm is to replace the worst point by a new point obtained

by reflecting the worst point through the centroid of the remaining points in the complex,

as illustrated in Figure 1. The worst point corresponds to the maximum value of the function vector

. The main idea of the algorithm is to replace the worst point by a new point obtained

by reflecting the worst point through the centroid of the remaining points in the complex,

as illustrated in Figure 1. The worst point corresponds to the maximum value of the function vector

. The centroid,

. The centroid,  , of the points in the complex excluding the worst point

, of the points in the complex excluding the worst point  , could be

calculated according to:

, could be

calculated according to:

(2)¶![x_{c,j} = \frac{1}{k-1} \left[ \left(\sum_{i=1}^k x_{i,j}\right) - x_{w,j}\right] \;\; i = 1,2 \ldots n](_images/math/075fd742911378c35c51a81af475f51d5ea45c8e.png)

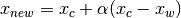

The new point is now calculated as the reflection of the worst point through the centroid

by a factor

(3)¶

The reflection coefficient  should equal 1.3 according to Box. If the new point is better

than the worst,

should equal 1.3 according to Box. If the new point is better

than the worst,  is replaced by

is replaced by  and the procedure starts over by reflecting the point

that is worst in the new complex.

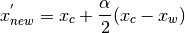

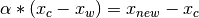

If the new point is still the worst it is moved halfway towards the centroid according

to:

and the procedure starts over by reflecting the point

that is worst in the new complex.

If the new point is still the worst it is moved halfway towards the centroid according

to:

(4)¶

This Equation (4) ould be rearranged by substituting  from equation (45) yielding

from equation (45) yielding

(5)¶

The procedure of moving the worst point towards the centroid is repeated until the new points stop repeating as the worst.

Figure 1 :Working principle of the Complex method for a problem with 2 design variables and 4 vertices in the complex. The curves represent contour lines of the objective function with the optimum to the right.

The procedure outlined is carried out until the complex has converged or until a predescribed number of evaluations is reached. Convergence could be measured either in the

function space or in variable space. In the function space the complex is considered

converged if the difference between the maximum and minimum function values of all

the points in the complex is less then a predescribed measure  .

Likewise, the complex has converged in the variable space if the maximum difference in all dimensions is less that a certain value

.

Likewise, the complex has converged in the variable space if the maximum difference in all dimensions is less that a certain value  , Thus

, Thus  and

and  constitutes a measure of the spread of the complex in function space and parameter space respectively.

constitutes a measure of the spread of the complex in function space and parameter space respectively.

(6)¶

(7)¶![{max[} {max}( x_{i,j} ) - {min}(x_{i,j} ) { ]} \leq {\epsilon}_v \;\;\; i= 1,2 \ldots k \\ j= 1,2 \ldots n](_images/math/46f08a1c1fa7626ea24c79177f14baf2b4e9610f.png)

As has been stated earlier, the complex is designed to handle constraints. Constraints in the form of limits on the design variables is handle by checking if the new point is within the variable limits. If not it is move to the feasible side of the limit. If the new points is violating any other constraint it is moved halfway towards the centroid.

4.2. Pseudo Code¶

The working principle of the complex method is here outlined using pseudo code.

Pseudo Code

| Generate Starting points

| Calculate objective function

| Evaluate constraints

| Identify the worst point

| While stop criteria is not met

| Calculate centroid

| Reflect worst point through centroid

| Make sure the new point is within the variable limits

| Calculate objective function for the new point

| Evaluate constraints

| Identify the worst point

| While the new point is the worst or a constraint is violated

| Move the new point towards the centroid

| Calculate the objective function

| Evaluate constraints

| end while

| Identify the worst point in the new complex

| Check stop criteria

| end while

| Output the optimal point

4.3. The Complex-RF Method¶

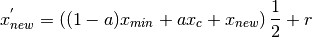

The pseudo code above describes the original Complex method as it was presented by Box. The method has some weaknesses. For one thing, if a local minimum is located at the centroid the method will keep on moving new points towards the centroid where the whole complex will collapse in one point [2]. In order to avoid this, the new point could gradually be moved towards the best point. Based on the equation (5) , the new point could now be expressed as

(8)¶

where  is the best point and

is the best point and

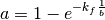

(9)¶

where  is the number of times the point has repeated as the worst and b is a constant that

here equals to 4. Thus the more times the point repeats as the worst the smaller a gets and

the new points is moved towards the best point since x min will have a large importance in

the calculation of

is the number of times the point has repeated as the worst and b is a constant that

here equals to 4. Thus the more times the point repeats as the worst the smaller a gets and

the new points is moved towards the best point since x min will have a large importance in

the calculation of  .

Another feature that could be added in order to make the method less prone to

collapse and lose dimensionality and to avoid getting stuck in local minima is to add

some randomness to it. This is accomplished by introducing a random noise vector r to

the new point in accordance to equation eq:ten

.

Another feature that could be added in order to make the method less prone to

collapse and lose dimensionality and to avoid getting stuck in local minima is to add

some randomness to it. This is accomplished by introducing a random noise vector r to

the new point in accordance to equation eq:ten

(10)¶

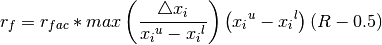

The random noise is calculated according to

(11)¶

where  is a randomization factor,

is a randomization factor,  and

and  the variable limits and

the variable limits and  represent the

spread in the i:th variable of the complex, and

represent the

spread in the i:th variable of the complex, and  is a random variable in the interval

[0.1]. This formulation implies that the noise added is a function of the convergence

is a random variable in the interval

[0.1]. This formulation implies that the noise added is a function of the convergence  , and the shape of the original design space, i.e. the variable limits.

Furthermore, the formulation makes it possible for the complex to maintain diversity and also regain lost

dimensionality.

, and the shape of the original design space, i.e. the variable limits.

Furthermore, the formulation makes it possible for the complex to maintain diversity and also regain lost

dimensionality.

Since the noise is a function of the maximum spread, perturbations could be added to dimensions in which the complex has already converged. This facilitates avoidance of local optima. The randomization factor thus makes the method more robust in finding the global optima to the cost of somewhat slower convergence. Experiments have shown that a randomization factor of 0.3 is a good compromise between convergence speed and performance.

It is also possible to include a forgetting factor,  , which ensures that the Complex is

made up predominantly with recent points. This is necessary if the objective function

varies over time. In that case, old objective function values become increasingly

unreliable and should be replaced by new ones. This is particularly true if the

optimization is to be used to optimize parameters in a real process. In this case there may

be drift in the parameters of the physical system. Introducing a forgetting factor has also

been found to improve the success rate in other situations as well.

, which ensures that the Complex is

made up predominantly with recent points. This is necessary if the objective function

varies over time. In that case, old objective function values become increasingly

unreliable and should be replaced by new ones. This is particularly true if the

optimization is to be used to optimize parameters in a real process. In this case there may

be drift in the parameters of the physical system. Introducing a forgetting factor has also

been found to improve the success rate in other situations as well.

One such situation is if the objective function is noisy, i.e. there are local variations in the objective function

between points close to each other in parameter space, or if the objective function has a

discrete nature with flat plateaus. The basic principal of the forgetting factor is to

continuously deteriorate objective function values The underlying mathematics of the

forgetting factor is described in detail in [6].

In [6], all parameters of the Complex algorithm are optimized in order to find the

parameter set that gives the best possible performance of the algorithm. It is then

concluded that

,

,

and

and

give a good performance of the algorithm.

The complex method has been applied to a wide range of problems such as physics, structural engineering, fluid power system design and aerospace

engineering.

give a good performance of the algorithm.

The complex method has been applied to a wide range of problems such as physics, structural engineering, fluid power system design and aerospace

engineering.

4.4. References¶

- Box, M.J., “A new method of constrained optimization and a comparison with other method”, Computer Journal, Volume 8, No. 1, pages 42-52, 1965.

- Guin J. A., “Modification of the Complex method of constraint optimization”, Computer Journal, Volume 10, pages 416-417, 1968.

- Spendley W., Hext G. R., and Himsworth F. R., “Sequential application of Simplex designs in optimisation and evolutionary operation”, Technometrics, Volume 4, pages 441-462, 1962

- Nelder J. A. and Mead R., “A simplex method for function minimization”, Computer Journal, Volume 7, pages 308-313, 1965

- Holland H. J., “Adaptation in Natural and Artificial Systems, an introductory analysis with application to biology, control and artificial intelligence”. Ann Arbor, The university of Michigan Press, 1975.

- Krus P. and Andersson J., “Optimizing Optimization for Design Optimization”, in Proceedings of ASME Design Automation Conference, Chicago, USA, September 2-6, 2003.

- Andersson J., “Multiobjective Optimization in Engineering Design - Application to Fluid Power Systems”, Doctoral thesis; Division of Fluid and Mechanical Engineering Systems, Department of Mechanical Engineering, Linköping University, Sweden, 2001

- Krus P., Ölvander J., “Performance Index and Meta Optimization of a Direct Search Optimization Method”, Engineering Optimization, Volume 45, Issue 10, pages 1167-1185, 2013.